Secure your Azure Container Apps Environment (Part 02)

In the second part of this two-part series on securing the Azure Container Apps Environment, we will walk through a deployment example, using Azure Verified Modules as far as possible. We will then cover how you can make the environment available to your development teams and how those teams can safely deploy Azure Container Apps into the managed environment.

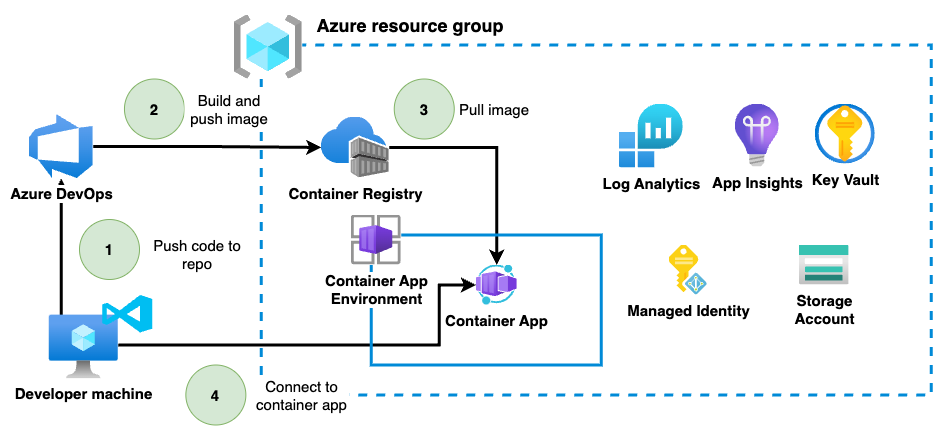

Container development process

Typically, the container development lifecycle would look something like the below.

In this deployment example, I will demonstrate the deployment of an Azure Container Apps Environment that supports this development approach. I will split the work into two deployments: the Container Apps Environment and the Container Apps themselves. I find this a useful separation of concerns between the environment, which is mostly managed by a core platform team, and the Container Apps deployments, which are mostly owned by development teams.

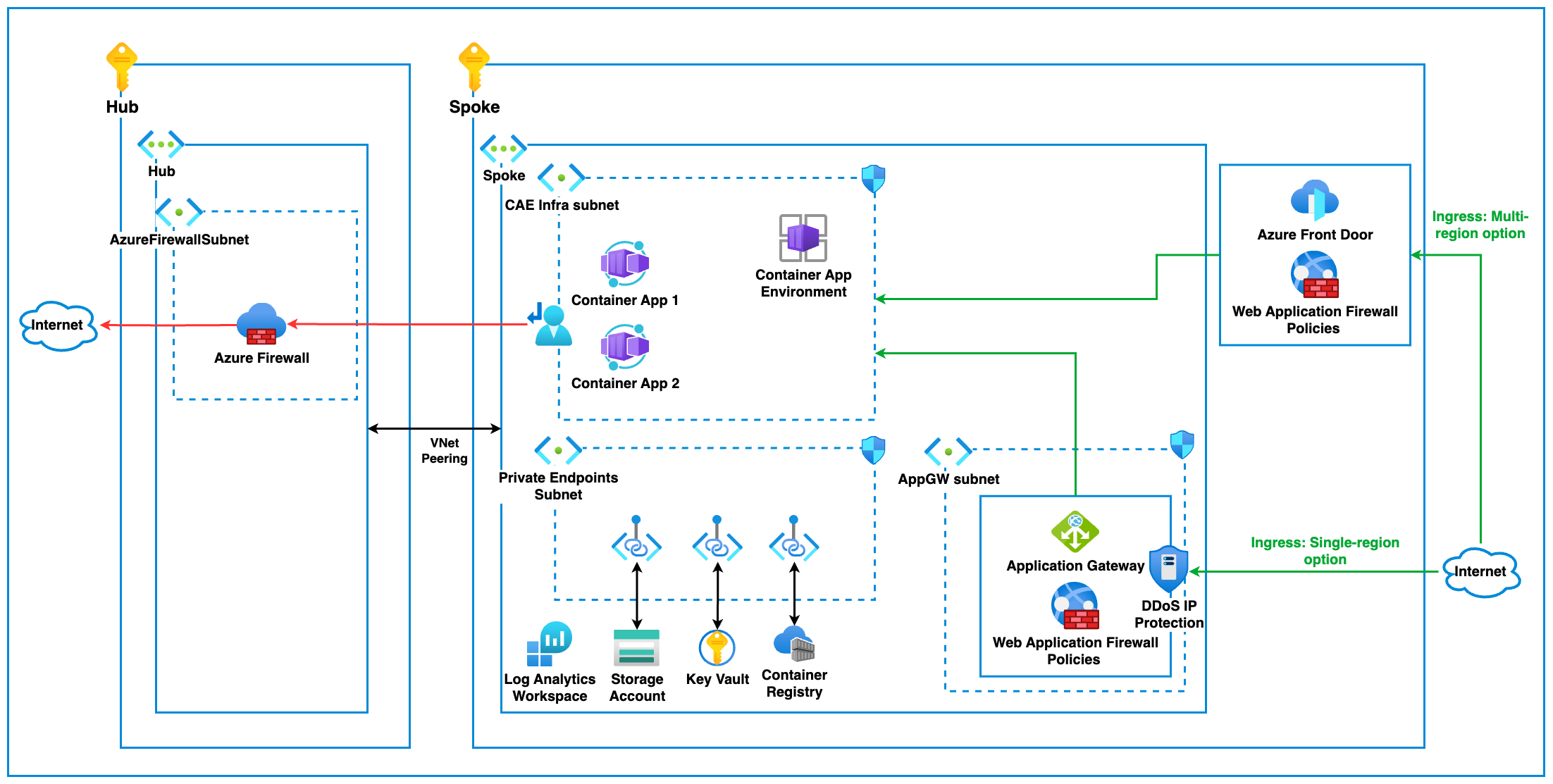

Deploying the Container Apps Environment

For the Azure Container Apps Environment to be usable, we need to deploy a number of supporting services alongside the environment itself. Most of them appear in the baseline diagram.

For this deployment to work, the following requirements must be in place:

- Azure Subscription

- Azure Resource Group

- Azure Virtual Network and Subnet

- Self-hosted Azure DevOps agents inside the VNet (if this is not possible, the container registry needs to be publicly accessible)

I do not include the resource group, VNet and subnet in the deployment, because in most cases we deploy into an existing landing zone.

The pipeline deploys the following services:

- Azure Key Vault

- Application Insights

- Log Analytics Workspace

- User-assigned Managed Identity

- Azure Container Registry

- Azure Container Apps Environment

The Azure Container Apps Environment resources can be deployed using the deploy-aca-resources.yml pipeline in Azure DevOps.

    name: deploy-aca-resources

    parameters:
      - name: environment
        displayName: Choose the environment to deploy
        type: string
        default: dev
      - name: whatIf
        type: boolean
        default: true

    trigger: none

    pool:
      vmImage: ubuntu-latest

    variables:
      - template: ../variables/global.yml
      - template: ../variables/env-${{ parameters.environment }}.yml

    stages:
      - stage: Deployment
        displayName: Deploy Bicep
        jobs:
          - job:
            displayName: Bicep deployment
            steps:
              # Validate the template before deploying it.
              - task: AzurePowerShell@5
                displayName: Test bicep
                inputs:
                  azurePowerShellVersion: LatestVersion
                  azureSubscription: $(serviceConnectionName)
                  pwsh: true
                  ScriptType: InlineScript
                  Inline: |
                    $Parameters = @{
                      ResourceGroupName = "$(resourceGroupName)"
                      TemplateFile      = "$(srcRootFolder)/infra/main.bicep"
                      # Template parameter; the name must match "param env" in main.bicep.
                      env               = "${{ parameters.environment }}"
                      Verbose           = $True
                    }
                    Test-AzResourceGroupDeployment @Parameters

              # Deploy the template, or only preview the changes when whatIf is true.
              - task: AzurePowerShell@5
                displayName: Deploy bicep
                inputs:
                  azurePowerShellVersion: LatestVersion
                  azureSubscription: $(serviceConnectionName)
                  pwsh: true
                  ScriptType: InlineScript
                  Inline: |
                    $Parameters = @{
                      Name              = "Deployment-$(build.buildId)"
                      ResourceGroupName = "$(resourceGroupName)"
                      TemplateFile      = "$(srcRootFolder)/infra/main.bicep"
                      # Template parameter; the name must match "param env" in main.bicep.
                      env               = "${{ parameters.environment }}"
                      Verbose           = $True
                      WhatIf            = $${{ parameters.whatIf }}
                    }
                    New-AzResourceGroupDeployment @Parameters

The deployment takes inputs from the following files:

- Infrastructure configuration from configs/cae.jsonc, which main.bicep loads.

- Pipeline variables from variables/global.yml and variables/env-dev.yml

The infrastructure deployment is defined in infra/main.bicep, as seen below. Because it uses only Azure Verified Modules, there is no need to maintain custom Bicep modules within the repo. When a new version of an Azure Verified Module is released, only the version reference in main.bicep needs to be updated.

    targetScope = 'resourceGroup'

    @description('Suffix to append to deployment names, defaults to MMddHHmmss')
    param timeStamp string = utcNow('MMddHHmmss')
    param location string = resourceGroup().location
    param env string = 'dev'
    param config object = loadJsonContent('../configs/cae.jsonc')

    var KeyVaultSecretsUser = subscriptionResourceId('Microsoft.Authorization/roleDefinitions', '4633458b-17de-408a-b874-0445c86b69e6')
    var acrPullRole = subscriptionResourceId('Microsoft.Authorization/roleDefinitions', '7f951dda-4ed3-4680-a7ca-43fe172d538d')

    var loggingObject = config[env].logging

    module workspace 'br/public:avm/res/operational-insights/workspace:0.7.0' = {
      name: 'deploy-${loggingObject.logAnalytics.name}-${timeStamp}'
      params: {
        // Required parameters
        name: loggingObject.logAnalytics.name
        // Non-required parameters
        dailyQuotaGb: loggingObject.logAnalytics.dailyQuotaGb
        location: location
        managedIdentities: {
          systemAssigned: true
        }
        publicNetworkAccessForIngestion: 'Disabled'
        publicNetworkAccessForQuery: 'Disabled'
        tags: config.tags
        useResourcePermissions: true
      }
    }

    module component 'br/public:avm/res/insights/component:0.4.1' = {
      name: 'deploy-${loggingObject.ApplicationInsights.name}-${timeStamp}'
      params: {
        // Required parameters
        name: loggingObject.ApplicationInsights.name
        workspaceResourceId: workspace.outputs.resourceId
        // Non-required parameters
        retentionInDays: loggingObject.ApplicationInsights.retentionInDays
        location: config.location
        tags: config.tags
      }
    }

    var keyVaultObject = config[env].keyVault

    module vault 'br/public:avm/res/key-vault/vault:0.9.0' = {
      scope: resourceGroup(keyVaultObject.resourceGroupName)
      name: 'deploy-keyvault-${timeStamp}'
      params: {
        // Required parameters
        name: keyVaultObject.name
        // Non-required parameters
        enablePurgeProtection: true
        enableSoftDelete: true
        softDeleteRetentionInDays: 7
        enableRbacAuthorization: true
        publicNetworkAccess: 'Disabled'
        location: config.location
        networkAcls: {
          bypass: 'AzureServices'
          defaultAction: 'Deny'
        }
        privateEndpoints: [
          {
            privateDnsZoneGroup: {
              privateDnsZoneGroupConfigs: [
                {
                  privateDnsZoneResourceId: keyVaultObject.privateDnsZoneResourceId
                }
              ]
            }
            service: 'vault'
            subnetResourceId: keyVaultObject.subnetResourceId
          }
        ]
        roleAssignments: [
          {
            principalId: containerAppServiceIdentity.outputs.principalId
            principalType: 'ServicePrincipal'
            roleDefinitionIdOrName: KeyVaultSecretsUser
          }
        ]
      }
    }

    var managedEnvironmentObject = config[env].managedEnvironment

    module managedEnvironment 'br/public:avm/res/app/managed-environment:0.8.0' = {
      name: 'deploy-${managedEnvironmentObject.name}-${timeStamp}'
      params: {
        // Required parameters
        logAnalyticsWorkspaceResourceId: workspace.outputs.resourceId
        name: managedEnvironmentObject.name
        // Non-required parameters
        infrastructureResourceGroupName: '${resourceGroup().name}-ace-infra'
        infrastructureSubnetId: resourceId(managedEnvironmentObject.vnetResourceGroup, 'Microsoft.Network/virtualNetworks/subnets', managedEnvironmentObject.vnetName, managedEnvironmentObject.subnetName)
        internal: true
        location: config.location
        tags: config.tags
        workloadProfiles: managedEnvironmentObject.workloadProfiles
      }
    }

    var privateDnsZoneObject = config[env].privateDnsZone

    module privateDnsZone 'br/public:avm/res/network/private-dns-zone:0.6.0' = {
      name: 'deploy-privateDnsZone-${timeStamp}'
      scope: resourceGroup(privateDnsZoneObject.SubscriptionId, privateDnsZoneObject.resourceGroupName)
      params: {
        name: managedEnvironment.outputs.defaultDomain
        a: [
          {
            aRecords: [
              {
                ipv4Address: managedEnvironment.outputs.staticIp
              }
            ]
            name: 'A_${managedEnvironment.outputs.staticIp}'
            ttl: 3600
          }
        ]
        virtualNetworkLinks: [
          {
            registrationEnabled: false
            virtualNetworkResourceId: resourceId(privateDnsZoneObject.linkedVnets[0].subscriptionId, privateDnsZoneObject.linkedVnets[0].resourceGroupName, 'Microsoft.Network/virtualNetworks', privateDnsZoneObject.linkedVnets[0].vnetName)
          }
        ]
        tags: config.tags
      }
    }

    module containerAppServiceIdentity 'br/public:avm/res/managed-identity/user-assigned-identity:0.4.0' = {
      name: 'deploy-${managedEnvironmentObject.name}-identity'
      params: {
        name: 'uami-${managedEnvironmentObject.name}'
        location: config.location
        tags: config.tags
      }
    }

    var containerRegistryObject = config[env].containerRegistry

    module acr 'br/public:avm/res/container-registry/registry:0.5.1' = {
      scope: resourceGroup(containerRegistryObject.resourceGroupName)
      name: 'deploy-${containerRegistryObject.name}-${timeStamp}'
      params: {
        // Required parameters
        name: containerRegistryObject.name
        // Non-required parameters
        location: config.location
        acrAdminUserEnabled: false
        acrSku: containerRegistryObject.sku
        azureADAuthenticationAsArmPolicyStatus: 'enabled'
        exportPolicyStatus: 'enabled'
        privateEndpoints: [
          {
            privateDnsZoneGroup: {
              privateDnsZoneGroupConfigs: [
                {
                  privateDnsZoneResourceId: containerRegistryObject.privateDnsZoneResourceId
                }
              ]
            }
            subnetResourceId: containerRegistryObject.subnetResourceId
          }
        ]
        roleAssignments: [
          {
            principalId: containerAppServiceIdentity.outputs.principalId
            principalType: 'ServicePrincipal'
            roleDefinitionIdOrName: acrPullRole
          }
        ]
        quarantinePolicyStatus: containerRegistryObject.quarantinePolicyStatus
        replications: []
        softDeletePolicyDays: 7
        softDeletePolicyStatus: 'disabled'
        trustPolicyStatus: 'enabled'
      }
    }
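The template above reads all of its settings from configs/cae.jsonc, which is not reproduced in this post. As a rough guide, the shape that file needs can be inferred from the properties main.bicep accesses. The sketch below writes that shape as a Bicep object literal so it stays in the same language as the template. The property names mirror what the template reads; every value, including names, SKUs and the `<...>` placeholders, is an assumption for illustration and not the author's actual configuration.

```bicep
// Hypothetical shape of configs/cae.jsonc, expressed as a Bicep object literal.
// Property names follow the accesses in main.bicep; all values are placeholders.
var exampleConfig = {
  location: 'westeurope'
  tags: {
    workload: 'container-apps'
  }
  // One block per environment; main.bicep selects it with config[env].
  dev: {
    logging: {
      logAnalytics: {
        name: 'log-cae-dev'
        dailyQuotaGb: 1
      }
      ApplicationInsights: {
        name: 'appi-cae-dev'
        retentionInDays: 30
      }
    }
    keyVault: {
      name: 'kv-cae-dev'
      resourceGroupName: 'rg-cae-dev'
      privateDnsZoneResourceId: '<resource ID of the Key Vault private DNS zone>'
      subnetResourceId: '<resource ID of the private endpoint subnet>'
    }
    managedEnvironment: {
      name: 'cae-dev'
      vnetResourceGroup: 'rg-network-dev'
      vnetName: 'vnet-dev'
      subnetName: 'snet-cae'
      workloadProfiles: [
        {
          name: 'Consumption'
          workloadProfileType: 'Consumption'
        }
      ]
    }
    privateDnsZone: {
      SubscriptionId: '<subscription ID hosting the private DNS zone>'
      resourceGroupName: 'rg-dns'
      linkedVnets: [
        {
          subscriptionId: '<subscription ID of the VNet>'
          resourceGroupName: 'rg-network-dev'
          vnetName: 'vnet-dev'
        }
      ]
    }
    containerRegistry: {
      name: 'acrcaedev'
      resourceGroupName: 'rg-cae-dev'
      sku: 'Premium' // private endpoints require the Premium SKU
      quarantinePolicyStatus: 'disabled'
      privateDnsZoneResourceId: '<resource ID of the registry private DNS zone>'
      subnetResourceId: '<resource ID of the private endpoint subnet>'
    }
  }
}

// Reading a value the same way main.bicep does for the selected environment.
output exampleEnvironmentName string = exampleConfig.dev.managedEnvironment.name
```

Keeping one block per environment in a single file is what lets the pipeline switch environments by passing only the environment name.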

Deploying the Container Apps

Now that all the Container Apps Environment resources have been deployed, development teams can deploy Container Apps into this existing environment. All resources are on the private network and need to be accessed through a solution such as Azure VPN.

Developers can now securely deploy Container Apps using the following flow:

- Build and Push Containers to Azure Container Registry.

- Add secrets to Azure Key Vault.

- Deploy Azure Container Apps into the existing private Container Apps Environment, referencing container images in the registry.

- Access the Azure Container App on the private network using the FQDN.

The backend and frontend containers each have an example YAML pipeline. For example, deploy-album-api.yml below has stages for building the container image and deploying the Container App. Because of limitations in the built-in Azure DevOps task for Container Apps deployment, I prefer to use a Bicep deployment, which keeps the secret and managed identity configuration clean.

    name: deploy-album-api

    parameters:
      - name: environment
        displayName: Choose the environment to deploy
        type: string
        default: dev

    trigger: none

    pool:
      vmImage: ubuntu-latest

    variables:
      - template: ../variables/env-${{ parameters.environment }}.yml
      - name: dockerfile
        value: "$(Build.SourcesDirectory)/src/album-api/src/Dockerfile"
      - name: containerName
        value: "album-api"

    stages:
      - stage: Build
        displayName: Build stage
        jobs:
          - job: Build
            displayName: Build and push job
            steps:
              - task: Docker@2
                displayName: Login to ACR
                inputs:
                  command: login
                  containerRegistry: ${{ variables.containerRegistryConnection }}
              - task: Docker@2
                displayName: Build image
                inputs:
                  command: build
                  repository: "${{ variables.repository }}/${{ variables.containerName }}"
                  dockerfile: ${{ variables.dockerfile }}
                  tags: $(Build.BuildId)
              - task: Docker@2
                displayName: Push image
                inputs:
                  command: push
                  repository: "${{ variables.repository }}/${{ variables.containerName }}"
                  tags: $(Build.BuildId)

      - stage: Deploy
        displayName: Deploy to containerapp
        jobs:
          - job: Deploy
            steps:
              - task: AzurePowerShell@5
                displayName: Deploy bicep
                inputs:
                  azurePowerShellVersion: LatestVersion
                  azureSubscription: ${{ variables.serviceConnectionName }}
                  pwsh: true
                  ScriptType: InlineScript
                  Inline: |
                    $Parameters = @{
                      Name              = "Deployment-$(Build.BuildId)"
                      ResourceGroupName = "$(resourceGroupName)"
                      TemplateFile      = "$(srcRootFolder)/main.bicep"
                      env               = "${{ parameters.environment }}"
                      imageBuild        = "${{ variables.acrName }}.azurecr.io/${{ variables.repository }}/${{ variables.containerName }}:$(Build.BuildId)"
                      containerName     = "${{ variables.containerName }}"
                      Verbose           = $True
                    }
                    New-AzResourceGroupDeployment @Parameters

The deployment takes inputs from the following files:

- Infrastructure configuration from configs/aca.jsonc

- Pipeline variables from variables/env-dev.yml

The infrastructure deployment is defined in main.bicep as seen below.

    param config object = loadJsonContent('../configs/aca.jsonc')
    param env string
    param containerName string
    param imageBuild string = ''
    param location string = resourceGroup().location

    @description('Suffix to append to deployment names, defaults to MMddHHmmss')
    param timeStamp string = utcNow('MMddHHmmss')

    // Deploy the containerApp
    var containerAppDeploymentObject = config[env].containerAppDeployment[containerName]

    module containerApp 'br/public:avm/res/app/container-app:0.11.0' = {
      name: 'deploy-${containerAppDeploymentObject.name}-${timeStamp}'
      params: {
        // Required parameters
        name: containerAppDeploymentObject.name
        containers: [
          {
            // image: !empty(image) ? image : containerAppDeploymentObject.imageName
            image: imageBuild
            name: containerAppDeploymentObject.name
            env: containerAppDeploymentObject.?env ?? []
            volumeMounts: containerAppDeploymentObject.?volumeMounts ?? []
            probes: containerAppDeploymentObject.?probes ?? []
            resources: {
              cpu: json(containerAppDeploymentObject.containerCpuCoreCount)
              memory: containerAppDeploymentObject.containerMemory
            }
          }
        ]
        scaleMinReplicas: containerAppDeploymentObject.containerMinReplicas
        scaleMaxReplicas: containerAppDeploymentObject.containerMaxReplicas
        volumes: containerAppDeploymentObject.?volumes ?? []
        registries: [
          {
            server: '${containerAppDeploymentObject.acrName}.azurecr.io'
            identity: containerAppDeploymentObject.userAssignedIdentityId
          }
        ]
        environmentResourceId: containerAppDeploymentObject.managedEnvironmentId
        // Non-required parameters
        location: location
        disableIngress: containerAppDeploymentObject.?disableIngress ?? false
        ingressAllowInsecure: containerAppDeploymentObject.?ingressAllowInsecure ?? false
        ingressExternal: containerAppDeploymentObject.?external ?? false
        ingressTargetPort: containerAppDeploymentObject.targetPort
        ingressTransport: 'auto'
        corsPolicy: {
          allowedOrigins: union([ 'https://portal.azure.com', 'https://ms.portal.azure.com' ], containerAppDeploymentObject.allowedOrigins)
        }
        dapr: containerAppDeploymentObject.?dapr ?? { enabled: false }
        managedIdentities: {
          userAssignedResourceIds: [
            containerAppDeploymentObject.userAssignedIdentityId
          ]
        }
        secrets: {
          secureList: containerAppDeploymentObject.?secrets ?? []
        }
      }
    }
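The template above looks up one entry per container in configs/aca.jsonc, which is not shown in this post. The sketch below illustrates what such an entry could look like for the album-api container, again written as a Bicep object literal. The property names come from the accesses in main.bicep. The values, the secret name `db-password` and the environment variable `DB_PASSWORD` are hypothetical. The secret uses the Key Vault reference form that Container Apps supports (`keyVaultUrl` plus `identity`), which fits the flow described earlier: the secret value stays in Key Vault and is read through the user-assigned identity.

```bicep
// Hypothetical shape of one entry in configs/aca.jsonc, as a Bicep object literal.
// Property names follow main.bicep; all values are illustrative placeholders.
var exampleAcaConfig = {
  dev: {
    containerAppDeployment: {
      // The key must equal the containerName parameter passed by the pipeline.
      'album-api': {
        name: 'album-api'
        acrName: '<registry name>'
        userAssignedIdentityId: '<resource ID of the user-assigned identity>'
        managedEnvironmentId: '<resource ID of the Container Apps Environment>'
        // A string, because main.bicep converts it with json().
        containerCpuCoreCount: '0.5'
        containerMemory: '1Gi'
        containerMinReplicas: 1
        containerMaxReplicas: 3
        targetPort: 8080
        // Required: main.bicep reads this without a safe-dereference fallback.
        allowedOrigins: []
        // Optional: secret resolved from Key Vault through the managed identity.
        secrets: [
          {
            name: 'db-password'
            keyVaultUrl: 'https://<vault name>.vault.azure.net/secrets/db-password'
            identity: '<resource ID of the user-assigned identity>'
          }
        ]
        // Optional: expose the secret to the container as an environment variable.
        env: [
          {
            name: 'DB_PASSWORD'
            secretRef: 'db-password'
          }
        ]
      }
    }
  }
}

output exampleTargetPort int = exampleAcaConfig.dev.containerAppDeployment['album-api'].targetPort
```

Properties read with the `.?` operator in the template (such as `probes`, `volumes` or `dapr`) can be left out of an entry entirely, while the others must be present.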

Making Container Apps available publicly

Because the Container Apps are deployed only to the internal VNet and registered in the private DNS zone, they are not reachable from the internet by default. The Container App endpoints can be made publicly available by placing Azure Application Gateway or Azure Front Door in front of them and configuring the private FQDN of the Azure Container App as the backend.
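For the Application Gateway option, the relevant part of the configuration is the backend pool and its HTTP settings. The fragment below is a minimal sketch of those two pieces only, not a complete gateway definition. It assumes the gateway sits in a VNet that can resolve the private DNS zone; the parameter `containerAppFqdn` and the names `aca-backend-pool` and `aca-https-settings` are hypothetical.

```bicep
@description('Hypothetical: private FQDN of the Container App, for example <app name>.<environment default domain>')
param containerAppFqdn string

// Backend pool that targets the Container App by its private FQDN.
var backendAddressPools = [
  {
    name: 'aca-backend-pool'
    properties: {
      backendAddresses: [
        {
          fqdn: containerAppFqdn
        }
      ]
    }
  }
]

// HTTPS to the backend, sending the backend FQDN as the host header so that
// the environment's ingress can route the request to the right app.
var backendHttpSettingsCollection = [
  {
    name: 'aca-https-settings'
    properties: {
      port: 443
      protocol: 'Https'
      pickHostNameFromBackendAddress: true
      requestTimeout: 30
    }
  }
]

// These values would be assigned to the properties of the same name
// on a Microsoft.Network/applicationGateways resource.
output backendAddressPools array = backendAddressPools
output backendHttpSettingsCollection array = backendHttpSettingsCollection
```

Passing the backend host name through matters here because the internal environment routes on the host header, so a request carrying only the gateway's public host name would not match any app.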